State Representation and Polyomino Placement for the Game Patchwork DRAFT

The paper State Representation and Polyomino Placement for the Game Patchwork by Mikael Z. Lagerkvist is presented at the 18th workshop on Constraint Modelling and Reformulation at The 25th International Conference on Principles and Practice of Constraint Programming, CP2019 in Stamford, CT, USA. This post summarizes the paper and gives some context on it.

The paper is available on my research page as is the presentation.

Why study Patchwork?

Since the research for last years paper on Kingdomino, I’ve been quite interested in games and game playing agents. Patchwork is one of my favorite games, and I felt it would be nice to try and write some agents for it.

While implementing the game playing agents, I realized that the constraint programming model for the placement problem turned out to be quite interesting, so I wrote a paper about it.

Patchwork

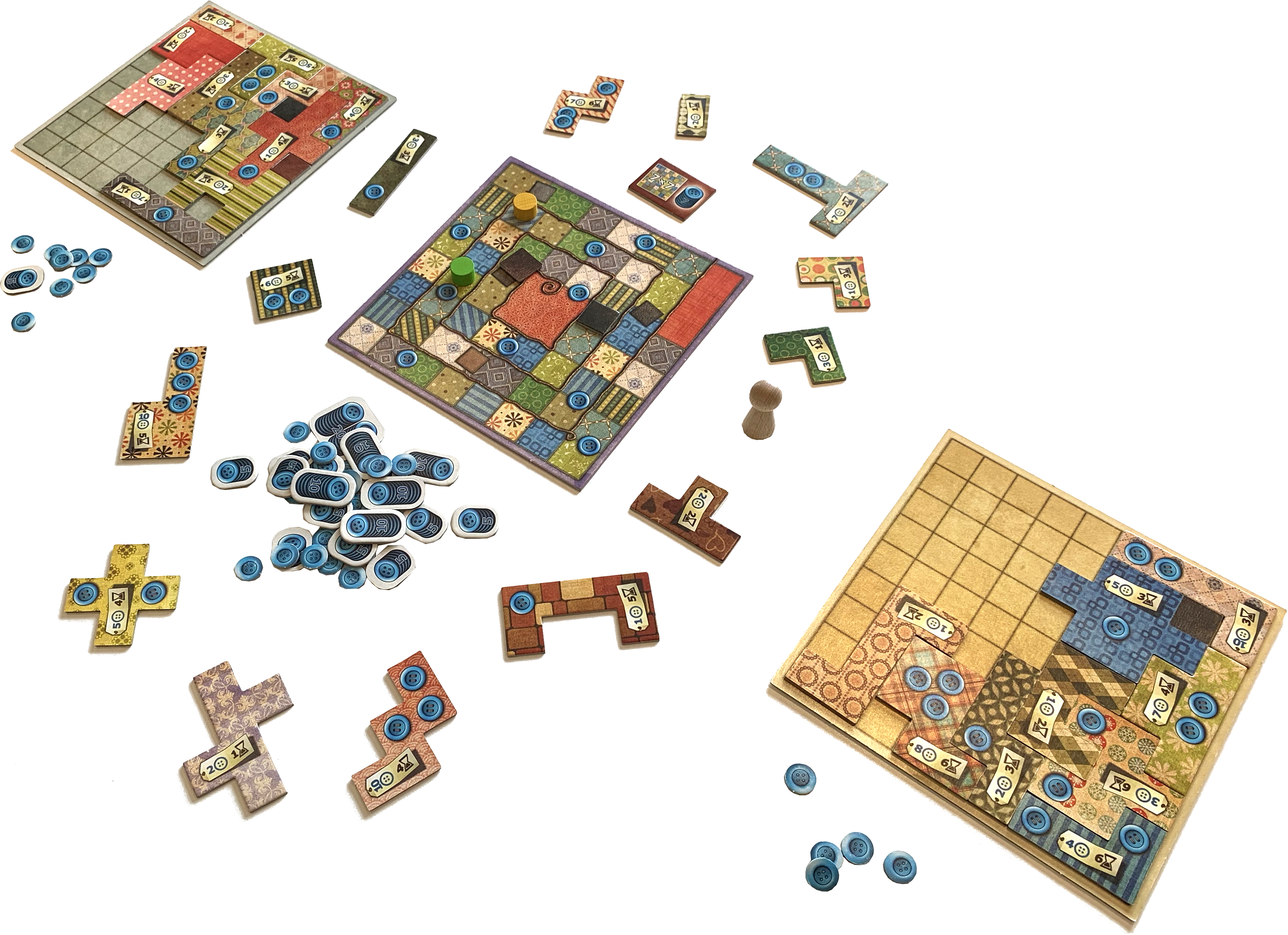

Patchwork by Uwe Rosenberg is a 2-player strategy game that uses tile drafting and polyomino placement. It is in 2019, five years after the release of the game, in the top 100 games on Bard Game Geek.

Rules of the game

The game consists of 33 polyominoes called patches, a marker, currency markers called buttons, two player tokens, two 9 by 9 boards (one per player) where patches are to be placed, and a central time board. Each patch has a button cost, a time cost, and between 0 and 3 buttons for income. The time board has 53 steps in total. Five of the steps have a special 1 by 1 patch, and 9 steps are marked with a button for income.

At the start of the game, the patches are organized in a circle, with a marker after the smallest patch. The player tokens are at the start of the time board, and each player has an empty board and 5 buttons.

In each turn, the player whose marker is the furthest back gets to move (if both players are at the same place, the last to arrive there gets to play). When making a move, a player may either advance their time marker to the step after the other player or buy and place a patch. Advancing the time marker to the step after the opponents time token is always possible, and gives the number of steps in button income.

To buy and place a patch, the player may choose one of the three next patches in the circle. The player must pay the indicated number of buttons on the patch (between 0 and 10) and place the patch on their board. The marker is moved to the place of the bought patch, and the player token is moved the number of steps indicated on the patch (between 1 and 6). To buy a patch, the player must have sufficient funds and the ability to place the patch on their board.

If the player when advancing passes a step marked with a button on the time board, they collect new buttons based on the number of income buttons on the patches they have placed. If the player is the first to pass one of the special 1 by 1 patches on the time board, they get the patch and place it on their board immediately.

The game ends when both players have reached the center of the time board. The final score is the buttons they have acquired, minus two for each uncovered square on the board. The first player (if any) that filled a complete 7 by 7 area of their board gets an extra 7 points.

Properties of patchwork

While Patchwork is a in one sense a classic deterministic finite 2-player game with full information, it has some aspects which are slightly unusual.

The game is fairly short, just around 23 plies experimentally. On the other hand, it has quite a large branching factor (around 83, also experimentally). A large part of this comes from the large number of possibilities for placing a patch.

The game does not alternate between the players each turn, the player that is furthest back on the time board is the next one to make a move. This introduces some interesting aspects in planning since some choices in some situations can lead to double (or even triple) moves for a player.

Game State and Constraint Programming

Classical board games such as chess have fairly simple game states, where a lot of effort has been spent optimizing the representation. In contrast, there are very many modern board games, and they typically have much more complex game states. This combination of more games to implement and more complexity means that it is desirable to find ways to simplify the game state implementation.

In this paper, constraint programming was used to represent the players boards with polyominoes. The game state is comprised of common information for the players positions on the time board, their current buttons, the list of patches, and the next player. In addition, two constraint programming models/states are kept, one for each board.

The basic inspiration for the model was from a paper and model I wrote 10 years ago with Gilles Pesant. The original model uses regular expressions for modeling tight polyomino placements problems such as Pentominoes, as well as a slightly extended that could be used to solve solitaire battleships. For Patchwork, I extended the basic model with the following ways

- Control of rotation. The original model used DFA minimization for handling rotations. The new model computes the unique rotations at the start, and adds control variables that indicate which rotation is used.

- Reified placement. Since not all polyominoes are placed, the placement needs to be reified. The solution is to have a control variable selecting between the regular expression with the placement, and an expression indicating no placement

- Usage. Additional constraints that model the usage of a patch is added, also using regular expressions. They use rotation and reification control variables as well.

One nice usage of the constraint model is that when all previous parts have been placed and the results have been propagated, the control variables will (since the regular constraint is domain consistent) indicate which patches can be placed.

The full details are available in the paper.

Strategies for Placing Polyominoes

It is not enough to have a model of the problem, during the game we also need to place patches. The context here is quite different from normal constraint programming search, where one typically searches to find an assignment to all the variables. Instead, we just want to find one or more possible placements of the patch we have chosen.

I defined a Strategy as something that chooses a good placement for a patch. The main idea here is to split the strategy into two parts, a placement policy and an evaluation. The policy finds one or more placements of a patch, while an evaluation chooses among the possibilities generated by the policy. Inspiration for placement policies have been taken from classical packing literature.

Placement policies

The perhaps most well-known placement policy in packing literature is the Bottom-Left policy, which tries to place a polygon in the bottom-most position, and among all the bottom-most positions, choose the one that is left-most. I generalized thisin a couple of ways, including choosing all bottom-most positions for all possible left-positions creating a sort of pareto-front.

In addition, several CP heuristics are used to find placements, both standard such as input order and smallest domain/first-fail, as well as some learning heuristics such as accumulated failure count over domain size etc.

On top of the basic placement policies, a “meta-policy” wrapping another policy was defined, which first chooses all the possible rotations of the parts, and then runs the wrapped policy.

Evaluations

Choosing among different potential placements is crucial. There are many natural ways to choose placements, such as left-most, bottom-most, and least bounding box.

The main evaluation introduced is called global propagation guided regret, and uses the result of propagation to guide the placement. In contrast to ideas such as impact based search, here we choose a placement that gives the least amount of propagation. The idea is to not rule out later placements, since we have to commit to a placement when making it.

Experiments

In total 119 combinations was tested on 1000 randomly generated packing problems with the Patchwork patches. The most important result is that the global propagation guided regret is the most important choice to get good results, and the more candidates it has to choose from, the better. The reverse of global propagation guided regret was the worst evaluation as a verification.

The placement policies inspired by classical packing literature were in general better than the ones using CP-style heuristics. The choice mostly boils down to how much time is allowed for the strategy. One very interesting counter-point is that the very simple input-order variable ordering is quite effective. This is because it implements a kind of bottom-left placement.

Implementation

THe implementation is done in C++17 and uses the Gecode constraint programming system. The implementation is available on GitHub.

Reflections

Implementing game playing agents is a lot of fun, and I find the area interesting. Using high-level modeling with constraint programming significantly simplified implementing the game state for Patchwork. Naturally, it would be possible to implement manually also, but that would be a lot more work.

The paper only presented experiments and details on the actual model. Naturally, it would be quite interesting to also implement an actual game tree search using the game state. To get reasonable performance, the code searching for placements will probably need to be optimized so that it doesn’t create unnecessary clones of the search spaces; almost all placements will succeed without any failures.